Why build from scratch when the garden already has what you need to grow?

As generative AI continues to transform industries, one platform stands out for making powerful models accessible, customizable, and cost-efficient: Vertex AI Model Garden.

The spotlight often lands on Model Garden's fine-tuning and custom-build capabilities, but it is much more than a fine-tuning tool. It's a launchpad for discovering, deploying, customizing, and operationalizing foundation models at scale, even with minimal ML expertise.

This blog will walk you through:

What is Vertex AI Model Garden?

The role of fine-tuning and how it works

Model discovery, deployment, and prompt design

MLOps integrations and cost optimizations

Use cases across industries

Visual diagram of the Model Garden lifecycle

What is Vertex AI Model Garden?

Vertex AI Model Garden is a centralized hub inside Google Cloud’s Vertex AI platform that offers:

A catalog of 130+ pre-trained foundation models

Multi-modal support (text, image, video, code, etc.)

Built-in tools for prompt tuning, parameter-efficient fine-tuning (PEFT), and deployment

Integration with Google Cloud’s MLOps, BigQuery, and GCS

Whether you're building a chatbot, automating documents, summarizing videos, or classifying images, Model Garden provides ready-to-use components to speed up AI adoption.

Fine-Tuning with Vertex AI Model Garden

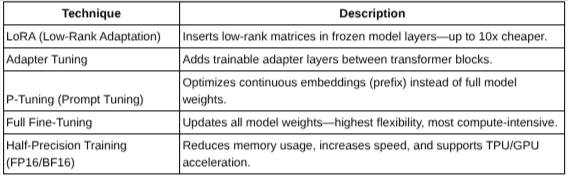

Fine-tuning foundation models with Model Garden allows precise adaptation for domain-specific tasks using efficient techniques like LoRA, Adapters, and P-Tuning. The process leverages Vertex AI’s managed infrastructure, making it suitable even with limited data or compute.
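To see why parameter-efficient techniques such as LoRA get by with so little compute, it helps to count trainable weights. The sketch below is illustrative arithmetic only, not Vertex AI code. It assumes a single hypothetical 4096 x 4096 weight matrix and a LoRA rank of 8, both chosen purely for illustration. LoRA keeps the original matrix frozen and trains two small matrices of shape (d_out x rank) and (rank x d_in) instead.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Weights updated when the whole matrix is fine-tuned."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Weights updated by LoRA: B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_in + d_out)


# Hypothetical layer size and rank, chosen only for illustration.
D_IN, D_OUT, RANK = 4096, 4096, 8

full = full_params(D_IN, D_OUT)
lora = lora_params(D_IN, D_OUT, RANK)

print(f"full fine-tuning: {full:,} trainable weights")   # 16,777,216
print(f"LoRA (rank {RANK}): {lora:,} trainable weights")  # 65,536
print(f"share trained: {lora / full:.2%}")                # 0.39%
```

For this one layer, LoRA trains well under 1% of the weights that full fine-tuning would update. Actual savings depend on the model, the layers that are adapted, and the rank you choose.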

Supported Fine-Tuning Techniques:

Choosing a foundation model in Vertex AI Model Garden:

Open Model Garden in the Vertex AI console to browse the catalog. From the CLI, the following command lists the models registered in your project for a given region:

gcloud alpha ai models list --region=us-central1

Data Preparation for training:

Prepare a dataset in JSONL, CSV, or TFRecord format. Store it in Cloud Storage.

gs://your-bucket/fine-tune-data/train.jsonl

Sample format (for text summarization):

{"input": "Product details: sleek black shoes", "output": "Black stylish shoes"}
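Before uploading, it can be worth checking that every line of the file parses and carries the expected fields. The following is a minimal sketch using only the Python standard library. The `input` and `output` keys are taken from the sample record above; the schema a given model actually expects may differ, so treat `REQUIRED_KEYS` and the helper names as assumptions to adjust.

```python
import json
import tempfile
from pathlib import Path

# Keys copied from the sample record above; adjust to the schema your model expects.
REQUIRED_KEYS = {"input", "output"}


def write_jsonl(records, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def validate_jsonl(path):
    """Return (line_number, problem) tuples; an empty list means no problems found."""
    problems = []
    with open(path, encoding="utf-8") as f:
        for line_number, line in enumerate(f, start=1):
            if not line.strip():
                problems.append((line_number, "blank line"))
                continue
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append((line_number, f"invalid JSON: {exc.msg}"))
                continue
            if not isinstance(record, dict):
                problems.append((line_number, "record is not a JSON object"))
                continue
            missing = REQUIRED_KEYS - record.keys()
            if missing:
                problems.append((line_number, f"missing keys: {sorted(missing)}"))
    return problems


if __name__ == "__main__":
    examples = [
        {"input": "Product details: sleek black shoes", "output": "Black stylish shoes"},
    ]
    out_path = Path(tempfile.gettempdir()) / "train.jsonl"
    write_jsonl(examples, out_path)
    print(validate_jsonl(out_path))
```

Once the file validates, it can be copied to the bucket path shown earlier, for example with `gcloud storage cp`.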

Monitor and Optimize Training

Track the job in the Vertex AI console under Training > Custom Jobs.

You can also stream its logs from the CLI:

gcloud ai custom-jobs stream-logs <job-id>

Key Benefits:

Requires less data

Trains faster

Can save up to 90% in compute costs compared with full fine-tuning

Works with open models like Gemma, Mistral, etc.

Example: A retail company fine-tunes a text summarization model to tailor outputs based on their product data — using only 1,000 examples and completing training in under 2 hours.

Discover and Compare Models Instantly

Model Garden isn’t just about customization — it helps you choose the right model before you even begin. You can:

Filter models by modality (text, image, audio)

Sort by provider (Google, Meta, Cohere, etc.)

Preview input/output formats and task capabilities

Use the try-it-now or Try in Vertex AI Studio options to evaluate models

No coding required to begin testing — just select a model, upload your input, and check the response.

No-Code & Low-Code Prompt Engineering

Sometimes, clever prompt design achieves 80% of what fine-tuning would — at 0% of the cost.

Model Garden provides built-in notebooks and tools for:

Designing few-shot or zero-shot prompts

Evaluating model performance

Saving prompt templates

Integrating into workflows

This lets teams validate AI use cases without jumping straight into training or deployment.
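As a rough illustration of what a saved prompt template might look like, here is a small sketch in plain Python. It is not a Model Garden or Vertex AI API: the `PromptTemplate` class and its `Input:`/`Output:` layout are hypothetical, and the example pair reuses the product record from the data preparation step. With no examples the template renders a zero-shot prompt; with examples it renders a few-shot prompt.

```python
from dataclasses import dataclass, field


@dataclass
class PromptTemplate:
    """A reusable prompt: an instruction plus optional worked examples."""

    instruction: str
    examples: list = field(default_factory=list)  # (input, output) pairs

    def render(self, query: str) -> str:
        """Build the final prompt text for one query."""
        parts = [self.instruction.strip(), ""]
        for example_input, example_output in self.examples:
            parts += [f"Input: {example_input}", f"Output: {example_output}", ""]
        parts += [f"Input: {query}", "Output:"]
        return "\n".join(parts)


# Zero-shot: instruction only.
zero_shot = PromptTemplate("Summarize the product description in a few words.")

# Few-shot: same instruction plus one worked example.
few_shot = PromptTemplate(
    "Summarize the product description in a few words.",
    examples=[("Product details: sleek black shoes", "Black stylish shoes")],
)

print(few_shot.render("Product details: lightweight blue running jacket"))
```

Keeping the instruction and examples separate from the query makes it easy to compare zero-shot and few-shot variants on the same inputs before deciding whether tuning is needed at all.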

One-Click Deployment & API Integration

Once your model is ready, Model Garden lets you deploy to a Vertex AI Endpoint with just a few clicks. This means:

No manual provisioning

Automatic scaling

Easy integration into apps via the REST API

Invocation from Cloud Functions, Workflows, or App Engine

This eliminates weeks of MLOps setup and lets you focus on solving real business problems.
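To make the REST integration concrete, the sketch below builds a `predict` request for a deployed endpoint using only the Python standard library. The project, region, endpoint ID, and access token are placeholders, and the shape of each item in `instances` depends on the deployed model, so the `prompt` field here is an assumption. The request is constructed but not sent.

```python
import json
import urllib.request


def build_predict_request(project, region, endpoint_id, instances,
                          access_token, parameters=None):
    """Build (but do not send) a POST request to a Vertex AI endpoint's predict method."""
    url = (
        f"https://{region}-aiplatform.googleapis.com/v1/"
        f"projects/{project}/locations/{region}/endpoints/{endpoint_id}:predict"
    )
    body = {"instances": instances}
    if parameters:
        body["parameters"] = parameters
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# All values below are placeholders; the instance schema is model-specific.
request = build_predict_request(
    project="your-project",
    region="us-central1",
    endpoint_id="1234567890",
    instances=[{"prompt": "Summarize: sleek black shoes"}],
    access_token="YOUR_ACCESS_TOKEN",
)
print(request.full_url)

# To actually call the endpoint (requires valid credentials):
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

For local testing, an access token can be obtained with `gcloud auth print-access-token`. The same request can be issued from a Cloud Function or any other service that holds suitable credentials.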

Seamless MLOps for Real-World AI

Deploying a model is only the beginning. Vertex AI’s MLOps suite ensures your model:

Stays accurate (via retraining triggers)

Remains compliant (with audit trails)

Is observable (via logging & monitoring)

Can be rolled back or improved at any point

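Everything is integrated into Google Cloud, so these controls work together without extra tooling, and because Model Garden also offers open models such as Gemma and Mistral, you can limit lock-in and keep control over your models.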
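The idea behind a retraining trigger can be sketched in a few lines. The class below is a hypothetical, local illustration and not the Vertex AI monitoring API: it assumes you can label each served prediction as correct or incorrect (for example from user feedback), and the window size and accuracy threshold are arbitrary example values.

```python
from collections import deque


class RetrainingTrigger:
    """Flags retraining when rolling accuracy drops below a threshold."""

    def __init__(self, window_size: int = 100, min_accuracy: float = 0.85):
        # Keep only the most recent outcomes; True means the prediction was judged correct.
        self.outcomes = deque(maxlen=window_size)
        self.min_accuracy = min_accuracy

    def record(self, correct: bool) -> None:
        self.outcomes.append(bool(correct))

    def accuracy(self) -> float:
        # With no data yet, report 1.0 so nothing is triggered.
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def should_retrain(self) -> bool:
        # Wait for a full window so a few early errors do not trigger retraining.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy


trigger = RetrainingTrigger(window_size=5, min_accuracy=0.8)
for correct in [True, True, False, False, True]:
    trigger.record(correct)

print(trigger.accuracy(), trigger.should_retrain())  # 0.6 True
```

In a managed setup, the signal would come from logged predictions and monitoring rather than an in-memory window, and a positive check would start a tuning job or pipeline instead of printing a flag.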

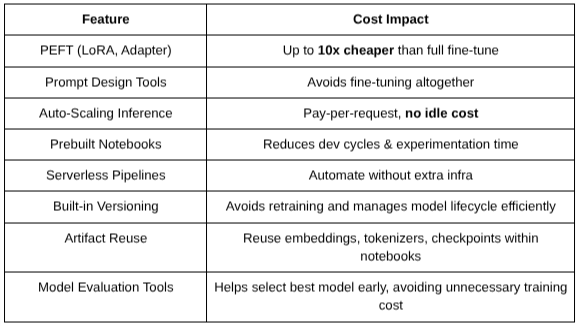

Cost-Saving Strategies with Model Garden

Here’s how Model Garden saves money and resources:

Outcome: You go from PoC to production without over-committing time, budget, or engineering resources.

Real-World Use Case: Healthcare Chat Assistant

Goal: Create a chatbot for post-surgery recovery queries

Steps:

Selected the Med-PaLM model from Model Garden

Tuned it with 500 example questions using LoRA

Deployed it to an endpoint and embedded it in the web app

Monitored feedback and used prompt tuning to improve accuracy

Result: Reduced patient calls by 40%, with model maintenance costs under $200/month.

Conclusion: From Models to Impact, Faster

The real value of Vertex AI Model Garden lies in its end-to-end support for the GenAI lifecycle:

Whether you're a startup building your first LLM app or an enterprise scaling AI across departments, Model Garden helps you build smarter AI faster and at lower cost.