Mastering Vertex AI: End-to-End Model Training and Deployment for MLOps Success

Introduction to Vertex AI: The Unified ML Platform

Vertex AI is Google Cloud's unified machine learning (ML) platform for training, deploying, and scaling ML models and AI applications. It also supports customizing large language models (LLMs) for use in AI-powered applications.

In traditional ML development, practitioners often work with a fragmented set of tools, using separate products for data preparation, model training, and deployment. This fragmentation frequently causes compatibility problems and adds operational complexity.[3] Vertex AI addresses these pain points with a unified, managed environment that spans the entire MLOps lifecycle, so teams can spend their effort on ML innovation and model development rather than on infrastructure management and integration work.

Why Choose Vertex AI? Key Features, Benefits, and Use Cases

Vertex AI offers a set of features and benefits that make it a strong choice for organizations looking to put their ML initiatives into production:

Unified ML Platform: Train, deploy, and monitor models in one place.

AutoML + Custom Training: For both beginners and ML experts.

Built-in MLOps: CI/CD, model tracking, versioning, and monitoring.

Scalable Infrastructure: Managed compute, autoscaling, and load balancing.

Generative AI Ready: Fine-tune and deploy models like Gemini, Imagen, Codey.

Real Use Cases: Used in healthcare, finance, manufacturing, and retail.

Fast, Cost-Efficient, Secure: Reduces development time, scales with pay-as-you-go pricing, and is built for enterprise security requirements.

Model Training: Building Intelligence for Your Data

Overview of Training Methods: AutoML vs. Custom Training

Vertex AI provides two primary methods for model training: AutoML and Custom Training. Which one to choose depends on the project's requirements, the team's ML expertise, and how much control you need over the model development process.

A. AutoML: AI for Everyone (Code-Free & Fast)

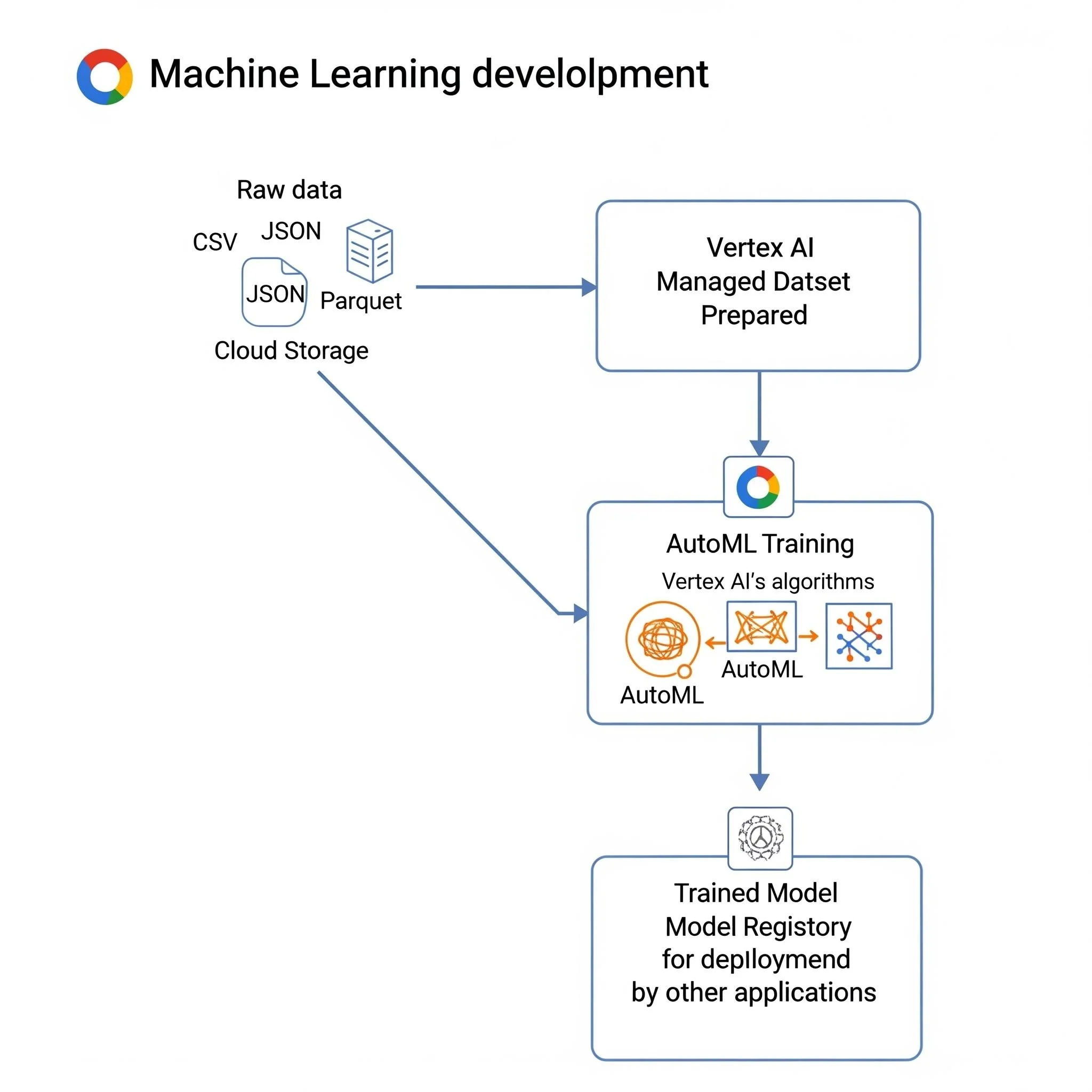

Concept: AutoML automates most of the model development process. You provide data (e.g., from Cloud Storage or BigQuery, in formats such as CSV or JSONL), and Vertex AI builds, trains, and evaluates the model for you.

Ideal for: Users with less ML expertise, rapid prototyping, and common tasks like classification, regression, and object detection.

Key Features: Automated data preparation, smart model selection, and efficient hyperparameter tuning. It leverages Managed Datasets for automatic data handling and splitting.
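Managed Datasets can split your data automatically; for AutoML tabular data the default is roughly 80% training, 10% validation, and 10% test. The minimal sketch below illustrates the idea with a hash-based split, which keeps each row in the same split across reruns. It is a hypothetical illustration, not Vertex AI's actual splitting logic, and `assign_split` and `split_rows` are made-up names.

```python
import hashlib


def assign_split(row_id: str, train: float = 0.8, validation: float = 0.1) -> str:
    """Deterministically map a row ID to 'train', 'validation', or 'test'."""
    # Hash the ID to a stable number in [0, 1) so reruns produce the same split.
    digest = hashlib.sha256(row_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000
    if bucket < train:
        return "train"
    if bucket < train + validation:
        return "validation"
    return "test"  # whatever remains (10% with the defaults)


def split_rows(row_ids):
    """Group row IDs by split name."""
    splits = {"train": [], "validation": [], "test": []}
    for row_id in row_ids:
        splits[assign_split(row_id)].append(row_id)
    return splits


if __name__ == "__main__":
    splits = split_rows(f"row-{i}" for i in range(10_000))
    for name, rows in splits.items():
        print(name, len(rows))
```

Hashing rather than shuffling is a design choice for the sketch: adding new rows later does not move existing rows between splits.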

B. Custom Training: Full Control for Experts (Flexible & Powerful)

Concept: Bring your own custom code and preferred ML framework (e.g., TensorFlow, PyTorch, scikit-learn, XGBoost). Run your training on scalable, managed infrastructure, often using data from Cloud Storage or BigQuery.

Ideal for: Experienced ML practitioners, complex problems, and projects requiring specific model architectures or advanced customization.

Key Features:

Containerized: Package your code in Docker containers for reproducibility. Use prebuilt containers for popular frameworks or create your own custom images.

Scalable Compute: Easily provision and scale CPUs, GPUs, or TPUs as needed.

Hyperparameter Tuning: Use Vertex AI hyperparameter tuning, powered by Vertex AI Vizier, to search for the hyperparameter values that optimize a target metric.

Experiment Tracking: Log metrics and compare runs in Vertex AI Experiments for full traceability.
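Vertex AI Vizier uses more sophisticated search strategies than this, so the sketch below swaps in plain random search purely to show the loop that hyperparameter tuning and experiment tracking automate: sample parameters, run training, record the metric, keep the best trial. The `Experiment` class and `toy_objective` function are hypothetical stand-ins for Vertex AI Experiments and a real training run; this is not the Vertex AI SDK.

```python
import math
import random
from dataclasses import dataclass, field


@dataclass
class Trial:
    params: dict
    metric: float


@dataclass
class Experiment:
    """Hypothetical in-memory stand-in for an experiment tracker such as Vertex AI Experiments."""
    name: str
    trials: list = field(default_factory=list)

    def log(self, params, metric):
        self.trials.append(Trial(dict(params), metric))

    def best(self, maximize=True):
        chooser = max if maximize else min
        return chooser(self.trials, key=lambda t: t.metric)


def toy_objective(learning_rate, depth):
    # Hypothetical validation score standing in for a real training run;
    # it peaks at 0.0 when learning_rate=0.1 and depth=6.
    return -((math.log10(learning_rate) + 1) ** 2) - 0.05 * (depth - 6) ** 2


def random_search(experiment, num_trials=50, seed=0):
    """Plain random search: sample, evaluate, log every trial, return the best one."""
    rng = random.Random(seed)
    for _ in range(num_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-4, 0),  # log-uniform in [1e-4, 1]
            "depth": rng.randint(2, 10),                # inclusive integer range
        }
        experiment.log(params, toy_objective(**params))
    return experiment.best(maximize=True)


if __name__ == "__main__":
    exp = Experiment("demo")
    best = random_search(exp)
    print(best.params, round(best.metric, 3))
```

Because every trial is logged, the run stays traceable: you can compare all trials afterwards, not just the winner.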

AutoML vs. Custom Model Training Comparison:

Model Deployment: Bringing Models to Life

Once trained, your model is ready to serve predictions. Vertex AI simplifies this critical step with flexible deployment options.

Online Prediction: Real-time & Low-Latency

Concept: Deploys your model to a dedicated Vertex AI Endpoint for real-time, low-latency predictions via a REST API.

Ideal for: Applications demanding immediate responses, such as recommendation engines, fraud detection, and interactive chatbots.

Key Features:

Managed Endpoints: Vertex AI handles scaling, health checks, and load balancing automatically.

Autoscaling: Resources adjust dynamically based on incoming traffic.

Traffic Splitting: Safely test new model versions by directing a percentage of traffic to them (e.g., for A/B testing or canary rollouts).
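A Vertex AI endpoint expresses a traffic split as a percentage per deployed model, and the percentages must add up to 100. The sketch below only mimics that weighted behavior locally to make the canary idea concrete; it is not how Vertex AI routes requests internally, and the model names are hypothetical.

```python
import random
from collections import Counter


def validate_split(traffic_split):
    """Require non-negative integer percentages that add up to exactly 100."""
    if any(not isinstance(p, int) or p < 0 for p in traffic_split.values()):
        raise ValueError("percentages must be non-negative integers")
    if sum(traffic_split.values()) != 100:
        raise ValueError("percentages must sum to 100")


def route(traffic_split, rng):
    """Pick the deployed model that serves one request, weighted by its percentage."""
    models = list(traffic_split)
    weights = [traffic_split[m] for m in models]
    return rng.choices(models, weights=weights, k=1)[0]


if __name__ == "__main__":
    # Hypothetical canary rollout: 90% to the current model, 10% to the candidate.
    split = {"model-v1": 90, "model-v2": 10}
    validate_split(split)
    rng = random.Random(42)
    print(Counter(route(split, rng) for _ in range(10_000)))
```

A canary rollout then becomes a sequence of splits (for example 90/10, then 50/50, then 0/100), each applied only after the new model's metrics look healthy.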

Batch Prediction: High-Throughput & Asynchronous

Concept: Processes large volumes of data for predictions that don't require immediate responses. Because no persistent endpoint is required, you pay only for the compute used while the job runs.

Ideal for: Offline tasks like nightly reports, large-scale data enrichment, or scoring entire datasets in a single operation.

Key Features:

Cost-Effective: Optimized for throughput, efficient for large, non-real-time tasks.

Flexible I/O: Input data from Cloud Storage or BigQuery, with results written back to your chosen location.
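Batch prediction input is commonly supplied as JSON Lines, one instance per line, and results are written back as files. The sketch below mimics that flow locally: it reads JSONL instances, scores them in fixed-size batches, and writes one `{"instance": ..., "prediction": ...}` record per line, loosely modeled on Vertex AI's JSONL output. `toy_model` and the batch size are hypothetical; a real job reads from Cloud Storage or BigQuery and runs on managed workers.

```python
import io
import json
from itertools import islice


def read_instances(lines):
    """Parse JSON Lines input: one JSON instance per non-blank line."""
    for line in lines:
        if line.strip():
            yield json.loads(line)


def batched(iterable, size):
    """Yield lists of up to `size` items (itertools.batched needs Python 3.12+)."""
    it = iter(iterable)
    while batch := list(islice(it, size)):
        yield batch


def toy_model(batch):
    # Hypothetical model: predicts the sum of each instance's feature values.
    return [sum(instance["features"]) for instance in batch]


def run_batch_job(input_lines, output_file, batch_size=2):
    """Score every instance and write one JSON result per line; return the count."""
    count = 0
    for batch in batched(read_instances(input_lines), batch_size):
        for instance, prediction in zip(batch, toy_model(batch)):
            record = {"instance": instance, "prediction": prediction}
            output_file.write(json.dumps(record) + "\n")
            count += 1
    return count


if __name__ == "__main__":
    source = io.StringIO('{"features": [1, 2]}\n{"features": [3, 4]}\n{"features": [5]}\n')
    sink = io.StringIO()
    print(run_batch_job(source, sink), "predictions written")
    print(sink.getvalue())
```

Scoring in batches rather than one request at a time is what makes this mode throughput-oriented: the model is called far fewer times for the same data.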

MLOps Best Practices and Automation with Vertex AI

Vertex AI is more than a collection of ML tools: it is built with MLOps principles at its core, supporting automation and governance across the entire ML lifecycle.

Vertex AI Pipelines: Orchestrate your entire ML workflow (data ingestion, training, evaluation, deployment) into reproducible CI/CD pipelines.

Vertex AI Feature Store: A centralized repository to manage, share, and serve ML features, preventing inconsistencies and mitigating training-serving skew.

Model Monitoring: Continuously detect training-serving skew and prediction drift in input features so you can catch performance degradation in production.
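Vertex AI Model Monitoring's documentation describes comparing feature distributions with L-infinity distance for categorical features and Jensen-Shannon divergence for numerical features, raising an alert when a distance crosses a threshold. The sketch below computes both measures on simple histograms to show what such a check does; it is not the service's implementation, and the thresholds, bin width, and sample data are hypothetical.

```python
import math
from collections import Counter


def normalize(counts):
    """Turn raw counts into a probability distribution."""
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()}


def l_infinity(p, q):
    """Largest absolute difference in probability for any single category."""
    keys = set(p) | set(q)
    return max(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def jensen_shannon(p, q):
    """Jensen-Shannon divergence with log base 2, so the result lies in [0, 1]."""
    keys = set(p) | set(q)
    m = {k: 0.5 * (p.get(k, 0.0) + q.get(k, 0.0)) for k in keys}

    def kl_to_m(a):
        # KL(a || m); terms with a[k] == 0 contribute nothing.
        return sum(a[k] * math.log2(a[k] / m[k]) for k in keys if a.get(k, 0.0) > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)


def to_bins(values, width):
    """Bucket numeric values into fixed-width bins so they can be compared as histograms."""
    return [math.floor(v / width) for v in values]


def check_drift(baseline, current, distance, threshold):
    """Compare two samples with `distance`; flag an alert when it exceeds `threshold`."""
    score = distance(normalize(Counter(baseline)), normalize(Counter(current)))
    return score, score > threshold


if __name__ == "__main__":
    # Categorical feature: country mix at training time vs. in production traffic.
    training = ["US"] * 70 + ["CA"] * 20 + ["MX"] * 10
    serving = ["US"] * 40 + ["CA"] * 20 + ["MX"] * 40
    print(check_drift(training, serving, l_infinity, threshold=0.2))

    # Numeric feature: bin first, then compare histograms with Jensen-Shannon.
    train_ages = to_bins([23, 31, 35, 42, 47, 51], width=10)
    serve_ages = to_bins([61, 64, 66, 70, 72, 75], width=10)
    print(check_drift(train_ages, serve_ages, jensen_shannon, threshold=0.2))
```

Comparing serving data against the training data corresponds to skew detection; comparing recent serving data against earlier serving data corresponds to drift detection. The same distance functions work for both.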

Continuous Integration/Continuous Delivery (CI/CD) for ML Pipelines

Manual ML processes are slow and error-prone, limiting fast experimentation.

Vertex AI Pipelines automates the full ML lifecycle:

Data preparation → Training → Evaluation → Deployment

Ensures workflows are reproducible and scalable

Supports orchestration frameworks like:

Kubeflow Pipelines

TensorFlow Extended (TFX)

CI/CD in ML goes beyond code:

Includes testing data, schemas, and models

Vertex AI supports Level 2 MLOps maturity (as defined in Google's MLOps maturity model):

Robust, automated CI/CD for ML in production

Typical CI/CD components integrated:

Source control, automated testing/builds, deployment services

Model Registry, Feature Store, ML metadata store

Benefits:

Faster iteration for data scientists

Higher reliability and stability through consistent automation

Clear roadmap for MLOps maturity and operational best practices
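Vertex AI Pipelines runs pipelines defined with Kubeflow Pipelines or TFX. The sketch below deliberately uses neither: it models the stages as plain Python functions to show the control flow such a pipeline encodes, including the data/schema test before training and the model test (an evaluation gate) before deployment. The schema, step functions, and accuracy threshold are all hypothetical.

```python
from collections import Counter

# Hypothetical schema for the toy dataset used below.
SCHEMA = {"feature": float, "label": str}


def validate_schema(rows, schema):
    """Data test: return a list of schema violations (empty means the batch passes)."""
    errors = []
    for i, row in enumerate(rows):
        missing = sorted(schema.keys() - row.keys())
        if missing:
            errors.append(f"row {i}: missing {missing}")
        for name, expected_type in schema.items():
            if name in row and not isinstance(row[name], expected_type):
                errors.append(f"row {i}: {name} should be {expected_type.__name__}")
    return errors


def prepare_data(rows):
    # Hypothetical cleaning step: drop rows with an empty label.
    return [r for r in rows if r["label"]]


def train(rows):
    # Hypothetical "model": always predict the most common training label.
    return Counter(r["label"] for r in rows).most_common(1)[0][0]


def evaluate(model, rows):
    # Model test: accuracy on held-out rows (assumes at least one row).
    return sum(r["label"] == model for r in rows) / len(rows)


def run_pipeline(train_rows, eval_rows, min_accuracy=0.6):
    """Validate -> prepare -> train -> evaluate, then deploy only if every gate passes."""
    errors = validate_schema(train_rows + eval_rows, SCHEMA)
    if errors:
        return {"status": "failed_validation", "errors": errors}
    model = train(prepare_data(train_rows))
    accuracy = evaluate(model, prepare_data(eval_rows))
    if accuracy < min_accuracy:
        return {"status": "rejected", "model": model, "accuracy": accuracy}
    # Stand-in for registering the model and deploying it to an endpoint.
    return {"status": "deployed", "model": model, "accuracy": accuracy}


if __name__ == "__main__":
    train_rows = [{"feature": 1.0, "label": "spam"}, {"feature": 2.0, "label": "spam"},
                  {"feature": 3.0, "label": "ham"}]
    eval_rows = [{"feature": 1.5, "label": "spam"}, {"feature": 2.5, "label": "spam"},
                 {"feature": 0.5, "label": "ham"}]
    print(run_pipeline(train_rows, eval_rows))
```

Each early return is a gate: bad data never reaches training, and an underperforming model never reaches deployment, which is what keeps automated runs safe to trigger from CI/CD.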

Table: Key MLOps Capabilities in Vertex AI

Costs and Quotas: Navigating Vertex AI Operations

Operating machine learning workloads on a cloud platform like Vertex AI requires a clear understanding of its pricing models, resource quotas, and common operational pitfalls. Proactive management of these aspects is crucial for cost optimization and ensuring smooth, uninterrupted MLOps workflows.

Understanding Vertex AI Pricing Models

Vertex AI operates on a flexible pay-as-you-use model, meaning organizations are billed only for the specific Vertex AI tools, services, storage, compute, and other Google Cloud resources consumed.

Training Costs: Training charges are based primarily on the machine type, the region where training runs, and any accelerators (GPUs, TPUs) attached, priced per hour of usage.

Prediction Costs: Both online and batch predictions are charged per node hour. For online prediction, a model deployed to an endpoint accrues charges for as long as it stays deployed, even when it receives no traffic.

Generative AI Pricing: Generative AI models are typically priced by the volume of input (prompt) and output (response), measured per 1,000 characters or per token depending on the model, or on a per-image basis for image generation.
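The pricing rules above reduce to simple arithmetic. The sketch below encodes them as functions; every rate passed in is a hypothetical placeholder, not a real Vertex AI price, so look up current rates for your region and machine type before relying on any estimate. The function names are illustrative only.

```python
def training_cost(hours, machine_rate, accelerator_rate=0.0, accelerator_count=0, replicas=1):
    """replicas x hours x (machine hourly rate + accelerator_count x accelerator hourly rate)."""
    return replicas * hours * (machine_rate + accelerator_count * accelerator_rate)


def endpoint_cost(node_count, hours_deployed, node_hour_rate):
    """Online endpoints accrue node-hour charges for every hour a model stays deployed."""
    return node_count * hours_deployed * node_hour_rate


def character_priced_cost(input_chars, output_chars, input_rate_per_1k, output_rate_per_1k):
    """Cost for a generative model billed per 1,000 input and output characters."""
    return (input_chars / 1000) * input_rate_per_1k + (output_chars / 1000) * output_rate_per_1k


if __name__ == "__main__":
    # All rates below are hypothetical placeholders, not real Vertex AI prices.
    print("training:", training_cost(hours=3, machine_rate=0.40,
                                     accelerator_rate=0.90, accelerator_count=2))
    print("idle endpoint, 30 days:", endpoint_cost(node_count=1, hours_deployed=720,
                                                   node_hour_rate=0.50))
    print("genai request:", character_priced_cost(4000, 1000, 0.0005, 0.0015))
```

The endpoint case shows why idle endpoints are expensive: one node left deployed for a 30-day month (720 hours) at a hypothetical $0.50 per node hour costs 1 × 720 × 0.50 = $360, whether or not any requests arrive.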

Key Takeaways & Best Practices: Operationalizing Success

To effectively leverage Vertex AI and achieve MLOps success, remember these critical points:

Permissions are Key: Make sure the service accounts used by training jobs and model deployments have the IAM permissions they need; missing permissions are a common and easily overlooked cause of failed jobs and deployments.

Monitor Models: Don't just deploy and forget! Implement continuous Model Monitoring to catch performance degradation early.

Cost Management: Be mindful of costs. Undeploy idle online endpoints to stop charges, and consider using Spot VMs for training where possible.
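Finding idle endpoints is the first step toward undeploying them. The sketch below assumes you already have the time of each endpoint's most recent request (where that comes from, for example your monitoring metrics, is outside the sketch); the endpoint names, the dictionary input format, and the seven-day cutoff are hypothetical. It only flags candidates, so a person or policy should still confirm before anything is undeployed.

```python
from datetime import datetime, timedelta, timezone


def find_idle_endpoints(last_request_times, now, max_idle=timedelta(days=7)):
    """Return endpoint names with no requests within `max_idle` (None means never used)."""
    return sorted(
        name
        for name, last_seen in last_request_times.items()
        if last_seen is None or now - last_seen > max_idle
    )


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    # Hypothetical usage data for three endpoints.
    usage = {
        "fraud-scorer": now - timedelta(hours=2),
        "old-demo": now - timedelta(days=30),
        "never-used": None,
    }
    print(find_idle_endpoints(usage, now))
```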

Vertex AI empowers data scientists and developers to focus on building intelligent solutions, not managing complex infrastructure. By unifying the ML lifecycle, it accelerates time to value for your AI initiatives.

Resources for Further Exploration

Google Cloud Codelabs: Interactive, step-by-step tutorials to guide you through specific tasks and services.

Vertex AI Samples (GitHub): A rich repository of sample notebooks for AutoML, custom training, and more.

Official Vertex AI Documentation: The definitive source for technical details, API references, and conceptual overviews.