Beyond the Break-Fix Cycle: Powering Intelligent Manufacturing with Google BigQuery

The 3:00 AM Production Crisis

The call comes in at 3:17 AM. It’s the Plant Manager at your primary Midwest facility. Line 4, critical for your highest-margin product line, is down hard. A key compressor seized.

The immediate reality is chaos. Maintenance teams are scrambling, consulting PDFs of manuals stored on a shared drive. Operations leaders are trying to calculate the impact on pending orders using spreadsheets that were last updated yesterday afternoon. The IT department is checking network logs, while the OT (Operational Technology) team is looking at local HMI panels.

Everyone is working hard, but they are working in the dark.

The frustration for a VP of Operations or CTO isn't just the downtime cost, which can amount to tens of thousands of dollars per hour. It’s the realization that this failure wasn't sudden. The machine had been "telling" you it was sick for weeks. Vibration sensors showed subtle increases. Temperature readings had drifted outside optimal norms. Energy consumption had spiked slightly on the third shift.

But those signals were trapped on the shop floor, disconnected from the enterprise systems that could analyze them.

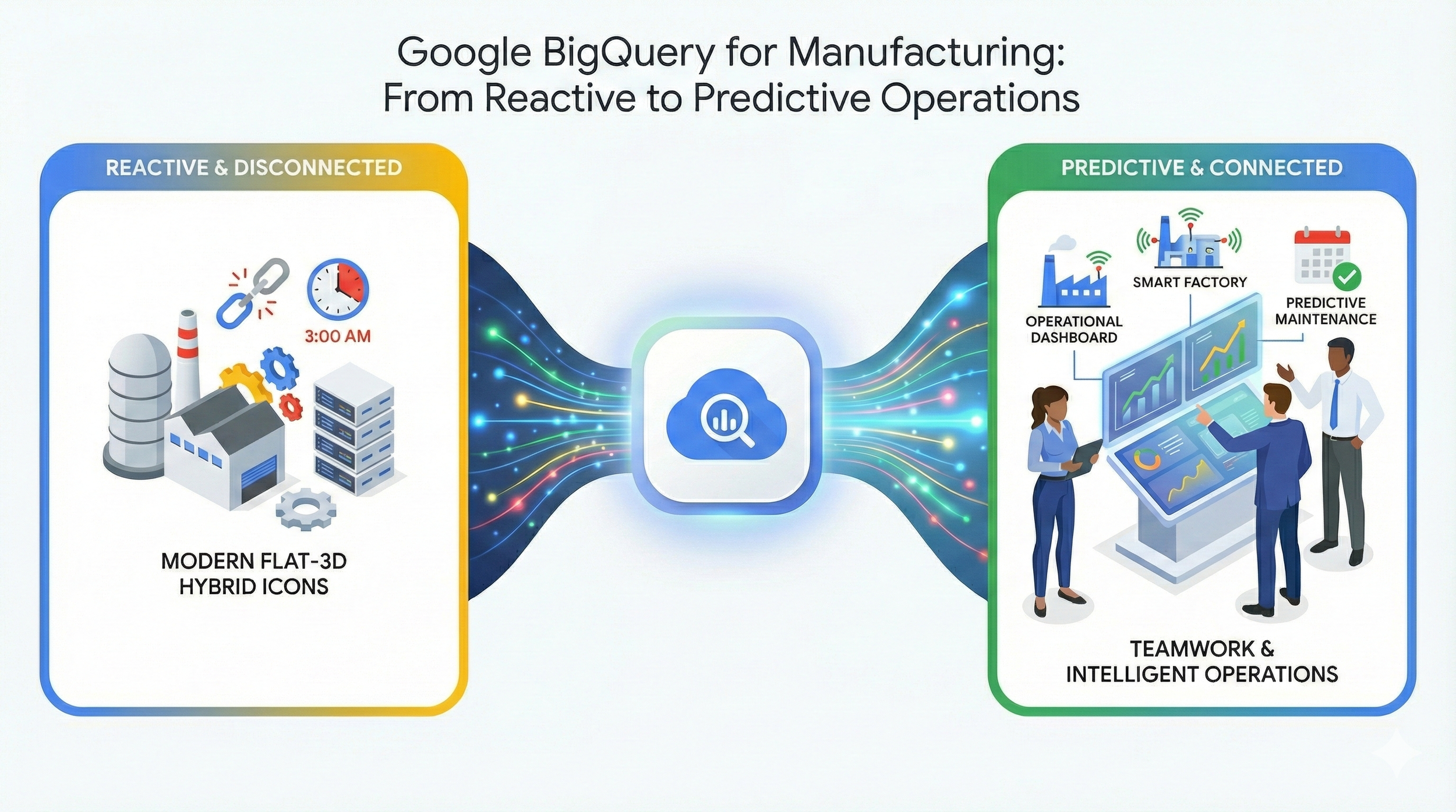

In modern manufacturing, the greatest risk isn't a lack of data; factories generate terabytes of sensor data daily. The risk is disconnected intelligence. When your physical operations (OT) and your digital enterprise (IT) speak different languages and live in different databases, you are perpetually stuck in reactive mode, fighting fires instead of preventing them.

This scenario is not an edge case. We see similar patterns across discrete and process manufacturers, automotive suppliers, industrial equipment makers, and regulated medical device plants, where equipment telemetry exists but remains isolated at the line or cell level.

In most environments, early warning signals are present weeks in advance, but the lack of a unified data foundation prevents teams from acting before failures cascade into downtime, scrap, or missed delivery commitments.

The shift to Industry 4.0 requires a foundational change in how industrial data is centralized and understood. This is the role of Google BigQuery: moving manufacturing leaders from reactive hindsight to predictive foresight.

The "Hidden Factory" and Data Opacity

Manufacturing doesn't suffer from a simple lack of reports; it suffers from a profound disconnect between the physical reality of production and the digital systems meant to manage it. This creates a "hidden factory" where inefficiencies and risks thrive unseen.

The Great IT/OT Divide

This is the defining data challenge of manufacturing. Operational Technology (SCADA systems, PLCs, historians) runs on industrial protocols, many of them vendor-specific, designed for millisecond-speed machine control, not analytics.

These environments typically rely on protocols such as OPC UA, Modbus, or MQTT and are aligned to ISA-95 models. They are optimized for deterministic control and uptime, not historical analysis or cross-system correlation.

Information Technology (ERP, MES, CRM) runs the business side. Bridging these two worlds has historically been expensive, fragile, and incomplete. The result is that headquarters can see the financial result of a production run, but not the physical conditions that created it.

The Quality Forensics Nightmare

When a quality defect is discovered in the field, tracing the root cause is often an archaeological dig. Engineers must manually correlate paper batch records with digital historian logs, supplier lot numbers from the ERP, and operator shift notes. This process can take weeks, during which the root cause, perhaps a slight humidity variance on a specific Tuesday afternoon, continues to create scrap.

Supply Chain Blind Spots

Manufacturers have decent visibility into their own inventory. The danger lies upstream. You might know your Tier 1 supplier is on schedule, but you have zero visibility into their suppliers (Tier 2 and 3). A port strike, a raw material shortage, or a geopolitical event three layers deep in your supply chain remains invisible until the moment your loading dock is empty, paralyzing production.

"Stranded" Asset Data

Your factory floor is loaded with smart machines, but that intelligence is often stranded locally. A CNC machine knows exactly how efficiently it's running (OEE), but if that data only lives on that machine's control panel, it cannot be compared against similar machines across your global footprint to identify best practices or systemic issues.
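
To make the cross-machine comparison concrete, here is a minimal sketch that computes OEE with the standard definition (availability × performance × quality) and ranks machines by it. The record fields and machine names are hypothetical, chosen only for illustration; in practice these counters would come from the machine controller or the MES.

```python
from dataclasses import dataclass


@dataclass
class ShiftRecord:
    """One shift of production counters for one machine (illustrative fields)."""
    machine_id: str
    planned_minutes: float      # scheduled production time
    run_minutes: float          # time the machine was actually running
    ideal_cycle_seconds: float  # ideal time to produce one part
    total_parts: int
    good_parts: int


def oee(rec: ShiftRecord) -> float:
    """Standard OEE: availability x performance x quality, each a ratio."""
    if rec.planned_minutes <= 0 or rec.run_minutes <= 0 or rec.total_parts <= 0:
        return 0.0
    availability = rec.run_minutes / rec.planned_minutes
    performance = (rec.ideal_cycle_seconds * rec.total_parts) / (rec.run_minutes * 60)
    quality = rec.good_parts / rec.total_parts
    return availability * performance * quality


def rank_by_oee(records):
    """Return (machine_id, oee) pairs, best machine first."""
    scored = [(r.machine_id, oee(r)) for r in records]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical machines in two plants.
fleet = [
    ShiftRecord("cnc-texas-07", 480, 400, 30, 700, 690),
    ShiftRecord("cnc-ohio-01", 480, 420, 30, 800, 780),
]
for machine_id, score in rank_by_oee(fleet):
    print(f"{machine_id}: OEE {score:.1%}")
```

Once every machine reports into the same place, this ranking becomes a single query across the fleet instead of a walk from control panel to control panel.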

What BigQuery Actually Changes

For manufacturing executives, Google BigQuery is not just a data warehouse; it is a universal translator for industrial operations. It fundamentally changes how you interact with your production environment.

1. It Handles the Velocity of IoT

Traditional databases choke on the volume and speed of sensor data: thousands of temperature readings, vibration frequencies, and pressure checks per second. BigQuery is designed for streaming ingestion at massive scale. It allows you to land high-frequency OT data alongside transactional IT data without performance degradation.

2. The Convergence Point for IT/OT

BigQuery acts as the common ground where shop floor data finally meets top-floor data. By ingesting sensor feeds via Google Cloud’s Pub/Sub and Dataflow, and combining them with ERP tables from SAP or Oracle, you create a single, queryable reality. You can finally ask questions like, "Show me the correlation between machine temperature anomalies on Line 3 and product returns in the Northeast region last month."

3. Moving from "Run-to-Failure" to Predictive

You cannot predict machine failures using spreadsheets. BigQuery provides the necessary historical depth and computing power to train machine learning models (via Vertex AI or BigQuery ML) that recognize the subtle patterns preceding a breakdown. It shifts maintenance from a calendar-based cost center to a condition-based strategic advantage.

4. A Foundation for Digital Twins

A digital twin, a virtual replica of a physical asset or process, requires vast amounts of varied data to be accurate. BigQuery serves as the scalable backend for these initiatives, holding the historical state data, performance logs, and engineering specs that make a digital twin viable for simulation and optimization.

Manufacturing Use Cases: From Reactive to Resilient

How does unifying these disparate data sources translate into better operational metrics such as OEE (Overall Equipment Effectiveness), scrap rate, and uptime?

1. True Predictive Maintenance (PdM)

The Situation: A manufacturer relies on schedule-based maintenance for critical stamping presses. They either replace parts too early (wasting money) or too late (causing catastrophic downtime).

Data in Play: High-frequency vibration sensor data, acoustic monitoring feeds, historical maintenance logs (from the CMMS), and machine age/cycle count data.

What BigQuery Enables: Data scientists train a BigQuery ML model to identify specific vibration signatures that indicate impending bearing failure. The system ingests live sensor data and scores it against the model in near real time.

The Impact: Increased OEE and reduced MRO spend. Maintenance teams receive alerts weeks before a failure, allowing them to schedule repairs during planned downtime rather than interrupting production.
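
The idea of scoring live readings against learned behavior can be illustrated with something far simpler than a trained model. The sketch below is not BigQuery ML; it is a plain rolling z-score, assuming vibration arrives as a list of numeric readings. The window size and threshold are arbitrary illustrative values.

```python
from collections import deque
from statistics import mean, stdev


def drift_alerts(readings, window=20, z_threshold=3.0):
    """Flag readings that sit far above the trailing baseline.

    Returns a list of (index, value, z_score) tuples. Flagged readings are
    kept out of the baseline so a sustained fault keeps alerting.
    """
    baseline = deque(maxlen=window)
    alerts = []
    for i, value in enumerate(readings):
        if len(baseline) == window:
            mu = mean(baseline)
            sigma = stdev(baseline)
            if sigma > 0:
                z = (value - mu) / sigma
                if z > z_threshold:
                    alerts.append((i, value, z))
                    continue  # do not let the anomaly pollute the baseline
        baseline.append(value)
    return alerts


# Hypothetical vibration amplitudes: steady operation, then a sudden jump.
steady = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95] * 4
for index, value, z in drift_alerts(steady + [2.5]):
    print(f"reading {index}: value={value} z={z:.1f}")
```

A production model would learn failure signatures from labeled maintenance history rather than a fixed threshold, but the loop is the same: build a baseline from history, then score each new reading against it.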

2. Automated Quality Root Cause Analysis

The Situation: A medical device manufacturer experiences a spike in yield loss due to micro-cracks, but cannot pinpoint the cause across a complex, 20-step manufacturing process.

Data in Play: MES process logs (time, pressure, temperature at each station), supplier raw material lot codes, environmental sensor data (plant humidity/temp), and final quality test results.

What BigQuery Enables: By pooling all process data into BigQuery, engineers use Looker to visualize correlations. They discover a subtle relationship: the defects correlate tightly with a specific raw material batch only when plant humidity exceeds 55% during the curing stage.

The Impact: Rapid scrap reduction. The root cause is identified in hours, not weeks, allowing for immediate corrective action on environmental controls.
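
The kind of interaction described above (a material lot that only causes trouble under certain humidity) shows up quickly once the data sits in one table. As a rough sketch, assuming each finished unit is a record with hypothetical fields `material_lot`, `curing_humidity`, and `defective`, a simple group-by is enough to surface it:

```python
from collections import defaultdict

HUMIDITY_LIMIT = 55.0  # percent relative humidity, taken from the scenario above


def defect_rates(units):
    """Return defect rate per (material lot, humidity band) group."""
    counts = defaultdict(lambda: [0, 0])  # key -> [defective, total]
    for unit in units:
        band = "high" if unit["curing_humidity"] > HUMIDITY_LIMIT else "normal"
        key = (unit["material_lot"], band)
        counts[key][0] += 1 if unit["defective"] else 0
        counts[key][1] += 1
    return {key: defective / total for key, (defective, total) in counts.items()}


def make_units(lot, humidity, defective, n):
    """Build n identical illustrative unit records."""
    return [
        {"material_lot": lot, "curing_humidity": humidity, "defective": defective}
        for _ in range(n)
    ]


# Made-up sample: only LOT-A cured in high humidity shows defects.
sample = (
    make_units("LOT-A", 58.0, True, 3)
    + make_units("LOT-A", 58.0, False, 1)
    + make_units("LOT-A", 48.0, False, 4)
    + make_units("LOT-B", 58.0, False, 4)
)
for group, rate in sorted(defect_rates(sample).items()):
    print(group, f"{rate:.0%}")
```

Neither the lot nor the humidity looks alarming on its own in this sample; only the combination does, which is why the join across MES, supplier, and environmental data matters.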

3. Dynamic Supply Chain Visibility

The Situation: An automotive supplier needs to know how a supplier delay in Asia will impact their ability to meet delivery-in-sequence commitments to an OEM customer next month.

Data in Play: ERP inventory levels, production schedules from the MES, 3PL shipping status feeds (APIs), and external risk signals (weather, port congestion data).

What BigQuery Enables: A supply chain "control tower" dashboard that links upstream supply shocks to downstream production impacts. The system can simulate scenarios: "If this container is delayed 5 days, which specific customer orders will be missed?"

The Impact: Improved customer service levels and agility. The company can proactively adjust production schedules or secure alternative freight, mitigating the impact on the customer.
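
The "what if this container is delayed?" question reduces to a join between orders and inbound shipments plus some date arithmetic. Here is a minimal sketch, assuming each order depends on exactly one shipment and that a fixed number of days is needed between arrival and delivery. The field names, IDs, and the two-day lead time are all hypothetical.

```python
from datetime import date, timedelta


def missed_orders(orders, shipments, delays, lead_days=2):
    """Return IDs of orders that cannot be delivered on time.

    delays maps shipment_id -> extra days of delay to simulate.
    lead_days is the assumed time from component arrival to finished goods.
    """
    missed = []
    for order in orders:
        shipment_id = order["shipment_id"]
        eta = shipments[shipment_id]["eta"] + timedelta(days=delays.get(shipment_id, 0))
        ready = eta + timedelta(days=lead_days)
        if ready > order["due"]:
            missed.append(order["order_id"])
    return missed


# Illustrative data: two customer orders waiting on the same container.
shipments = {"C-100": {"eta": date(2025, 3, 10)}}
orders = [
    {"order_id": "SO-1", "shipment_id": "C-100", "due": date(2025, 3, 14)},
    {"order_id": "SO-2", "shipment_id": "C-100", "due": date(2025, 3, 20)},
]
print("On schedule:", missed_orders(orders, shipments, delays={}))
print("5-day delay:", missed_orders(orders, shipments, delays={"C-100": 5}))
```

A real control tower would work from bills of materials and multi-leg shipments, but the shape of the question is the same: push a delay through the dependency chain and list what breaks.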

4. Energy and Sustainability Optimization

The Situation: Energy costs are a major operating expense, and the company has aggressive carbon reduction goals.

Data in Play: Machine-level power consumption meters (IoT), production schedules, and real-time energy spot market pricing feeds.

What BigQuery Enables: The platform analyzes energy usage patterns against production needs. It identifies opportunities to shift energy-intensive processes (like firing up furnaces) to times of day when energy spot prices are lowest, or when grid carbon intensity is lower.

The Impact: Direct cost reduction and progress toward sustainability targets.
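
Shifting an energy-intensive job to the cheapest part of the day is, at its simplest, a sliding-window search over the price forecast. The sketch below assumes one price per hour and a job that must run for a fixed number of consecutive hours; the prices are invented. The same function works if you pass hourly grid carbon intensity instead of price.

```python
def cheapest_window(hourly_prices, duration_hours):
    """Return (start_hour, total_cost) of the cheapest contiguous run window.

    Ties go to the earliest window.
    """
    if not 0 < duration_hours <= len(hourly_prices):
        raise ValueError("duration must fit inside the price horizon")
    window_cost = sum(hourly_prices[:duration_hours])
    best_start, best_cost = 0, window_cost
    for start in range(1, len(hourly_prices) - duration_hours + 1):
        # Slide the window one hour: add the new hour, drop the oldest.
        window_cost += hourly_prices[start + duration_hours - 1] - hourly_prices[start - 1]
        if window_cost < best_cost:
            best_start, best_cost = start, window_cost
    return best_start, best_cost


# Illustrative spot prices for six consecutive hours.
prices = [50, 40, 30, 20, 25, 60]
start, cost = cheapest_window(prices, duration_hours=2)
print(f"Run the furnace starting at hour {start} for a total price of {cost}")
```

In practice the search would also respect production deadlines and shift schedules, which is where joining the price feed with the production plan pays off.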

High-Level Architecture: The Connected Factory Hub

For OT and IT leaders, the goal is an architecture that respects the need for shop-floor speed while enabling cloud-scale intelligence. BigQuery is the convergence hub.

The Flow of Industrial Intelligence:

The Edge (The Physical World):

Sensors, PLCs, and SCADA systems generate data.

IoT Edge devices or gateways collect this data locally, performing initial filtering.

Ingest (The Bridge):

Streaming OT Data: High-velocity sensor data is streamed securely to Google Cloud using Pub/Sub, which decouples machine data producers from downstream analytics consumers and supports bursty, event-driven industrial workloads.

Batch IT Data: Data from ERP, MES, and Quality systems is replicated into the cloud using tools like Datastream (change data capture from operational databases) or Dataflow (batch and streaming pipelines).

Store & Model (The Brain):

All data lands in BigQuery.

Raw sensor logs are stored cheaply in time-partitioned tables.

This OT data is joined with IT context (e.g., joining a sensor ID with an asset ID from the ERP) to create unified data models.

Analyze & Act (The Value):

Looker: Provides real-time OEE dashboards for plant managers and cross-plant comparisons for executives.

Vertex AI/BigQuery ML: Runs predictive models on the historical data to forecast failures or optimize setpoints.

Feedback Loop: Insights can be pushed back to the shop floor HMI or MES to guide operators.
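
Two of the steps in this flow are easy to picture in code: the initial filtering at the edge and the join that gives a raw sensor reading its business context. The sketch below uses a deadband filter, one common way to thin out sensor data, and a lookup against a hypothetical asset registry. The sensor ID, asset ID, and field names are invented for illustration, and the registry stands in for an ERP or CMMS export.

```python
# Hypothetical mapping from sensor to asset, standing in for ERP/CMMS data.
ASSET_REGISTRY = {
    "vib-0042": {"asset_id": "PRESS-07", "line": "Line 4"},
}


def deadband_filter(samples, deadband):
    """Keep a sample only when it moves more than `deadband` from the last kept value."""
    kept = []
    last_value = None
    for ts, value in samples:
        if last_value is None or abs(value - last_value) > deadband:
            kept.append((ts, value))
            last_value = value
    return kept


def enrich(sensor_id, samples, registry=ASSET_REGISTRY):
    """Attach asset context to each sample, producing rows ready to load."""
    asset = registry.get(sensor_id)
    if asset is None:
        raise KeyError(f"sensor {sensor_id!r} is not mapped to an asset")
    return [
        {"sensor_id": sensor_id, "ts": ts, "value": value, **asset}
        for ts, value in samples
    ]


# Illustrative temperature samples as (seconds, degrees) pairs.
raw = [(0, 70.0), (1, 70.1), (2, 70.2), (3, 71.0), (4, 71.1), (5, 69.5)]
for row in enrich("vib-0042", deadband_filter(raw, deadband=0.5)):
    print(row)
```

Filtering at the gateway reduces what has to cross the network, while the join is better done centrally, where the asset registry can change without touching edge devices.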

From Quick Wins to Smart Factory Strategy

Modernizing manufacturing data is complex due to the mix of brand-new machines and decades-old legacy equipment. We recommend a pragmatic, agile approach:

Phase 1: Connect the Unconnected (Weeks 1-12): Pick one critical bottleneck machine or line that is currently opaque. Retrofit necessary sensors or tap into existing controllers. Stream that data to BigQuery and build a basic health monitoring dashboard. Prove value through reduced downtime on that single asset.

Phase 2: Contextualize and Scale (Months 3-9): Expand to similar asset classes across the plant. Crucially, begin joining this sensor data with MES/ERP data to understand the context of machine performance (e.g., performance by product SKU or operator).

Phase 3: Predictive and Autonomous (Month 9+): With a rich historical dataset in BigQuery, begin training predictive models for maintenance and quality. Explore using Gemini to allow maintenance staff to query technical documentation using natural language.

Why Evonence + Google Cloud for Manufacturing

Manufacturing data projects often fail because partners understand the cloud, but they don't understand the shop floor. They don't know a PLC from a VFD.

As a Premier Google Cloud Partner, Evonence bridges this gap.

IT/OT Fluency: We understand the unique challenges of extracting data from industrial protocols and legacy historians. We know that shop floor network security is different from office network security.

Real-Time Architecture: We have deep expertise in architecting streaming pipelines using Pub/Sub and Dataflow that can handle the crush of industrial IoT data.

From Visibility to AI: We don't just build dashboards; we prepare your data for advanced applications like predictive maintenance and Gemini-powered operational assistants.

We help you turn a collection of disconnected machines into an intelligent, unified enterprise.

The End of Reactive Operations

In a global market defined by tight margins and complex supply chains, you can no longer afford to run your factories based on hindsight. The data required to optimize production, predict failures, and ensure quality exists today; it’s just trapped in disconnected systems.

Google BigQuery provides the architecture to liberate that data, converging the physical and digital worlds to create a truly connected factory.

Are you ready to stop reacting to breakdowns and start predicting them?

Next Steps

Move from "break-fix" to intelligent operations.

Schedule an "IT/OT Convergence Workshop": Let’s assess your current shop floor connectivity and identify the highest-value assets for a pilot data project.

Request a Smart Factory Readiness Assessment: Our architects will review your data landscape and outline a roadmap to a predictive Google Cloud foundation.